Would it be a good idea to use containers on an embedded Linux system?

Some time ago I wrote an introductory article on Linux containers. I mentioned in the article that there are already several embedded Linux distributions based on containers, including Linux microPlatform from Foundries.io, Torizon from Toradex and balenaOS from balena.

What these projects do is provide a Linux distribution with a Docker-based infrastructure to run and manage containers. The idea is to install the distribution on your hardware platform and develop/run your applications inside container images.

At the end of the article, I left some questions open. Do we have enough hardware resources to run containers on embedded systems? How can we easily package a cross-compiled application and its dependencies into a container image? How do we manage the licenses of software artifacts (base operating system, container images, etc)?

In this article, I will talk about these and many other challenges. But let’s start with the motivations.

Why would we use containers on an embedded Linux system?

Motivations for using containers

Frustration with build systems can be a major motivation for using containers. Many developers, especially those coming from the world of microcontrollers, find it difficult to understand the concepts and tools provided by a build system like Buildroot or the OpenEmbedded/Yocto Project. Developers sometimes want to avoid the hassle of building everything from source. It is much more comfortable and productive to work with a ready-made Linux distribution. The effort and learning curve required to create and maintain a customized Linux distribution using a build system are much greater than those required to create simple container images.

Using containers, the development time is reduced. The focus is on application development, and less time is spent on creating and maintaining the infrastructure to run the application. Extending the distribution with new applications can take minutes by just adding new container images to the system. There are already several ready-to-use container images available on Docker Hub that could be used by the developer. So product development can be much more productive using containers.

Devices are more and more often part of a complex solution, and a container-based system can facilitate the creation and maintenance of the necessary infrastructure to run the device’s applications. The main components of the system (user interface application, web server, database server, network tools, etc) can be divided into containers, facilitating the management of the system’s “building blocks” (installation, uninstallation, configuration, updates, etc).

In particular, containers facilitate the implementation of a more robust, fast and fail-safe update system. Because a container image can be distributed on its own, an update can replace a single container instead of the whole system image, which avoids transferring very large files over the network and helps devices with limited connectivity. Stopping and starting a container is also very simple, which makes updating the system easier. With a lower risk of update problems, there is a natural tendency to update the system more often, improving product quality and security. Consumers have more confidence in companies that respond quickly to security issues.

Containers facilitate the partitioning of the system’s hardware resources. This way, we can have fine control over the application’s use of CPU, memory and I/O. And by splitting functionality between processes running inside isolated containers, we can limit each process’s permissions and control communication between them, reducing attack vectors and improving the security of the product.
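To make this more concrete, here is a minimal sketch of how such limits could be expressed when starting a container with the Docker CLI. The helper `docker_run_args` and the image name `example/webserver:1.0` are hypothetical and only serve as an illustration; the flags themselves (`--cpus`, `--memory`, `--cap-drop`, `--read-only`) are standard `docker run` options. The function only builds the argument list, it does not start anything.

```python
def docker_run_args(image, cpus, memory, drop_all_caps=True, read_only=True):
    """Build a `docker run` argument list with resource and permission limits.

    `image` is a hypothetical image name; `cpus` is a fraction of CPU time
    (e.g. 0.5) and `memory` is a Docker size string (e.g. "128m").
    """
    args = [
        "docker", "run", "--detach",
        "--cpus", str(cpus),      # limit CPU time available to the container
        "--memory", memory,       # limit RAM available to the container
    ]
    if drop_all_caps:
        # Drop all Linux capabilities; add back only what the app needs.
        args += ["--cap-drop", "ALL"]
    if read_only:
        # Mount the container's root file system read-only.
        args.append("--read-only")
    args.append(image)
    return args


if __name__ == "__main__":
    print(" ".join(docker_run_args("example/webserver:1.0", 0.5, "128m")))
```

The resulting list could be passed to `subprocess.run` on the target. Block I/O has analogous options (for example `--blkio-weight`), but whether they take effect depends on the kernel configuration of the base distribution.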

Imagine the situation where a legacy application needs an old version of a given library. Creating and maintaining an execution environment that supports different versions of the same library can be laborious. Since in containers the application is packaged together with its dependencies, this problem is easily solved.

Containers also encourage the development of a more modular system, improving portability and reuse. A container image is reusable and could be deployed in different products and devices (based on the same hardware architecture with a compatible kernel).

The reality is that with containers we bring the technology of microservices and the culture of DevOps to the world of embedded systems!

But…

We still have some challenges ahead!

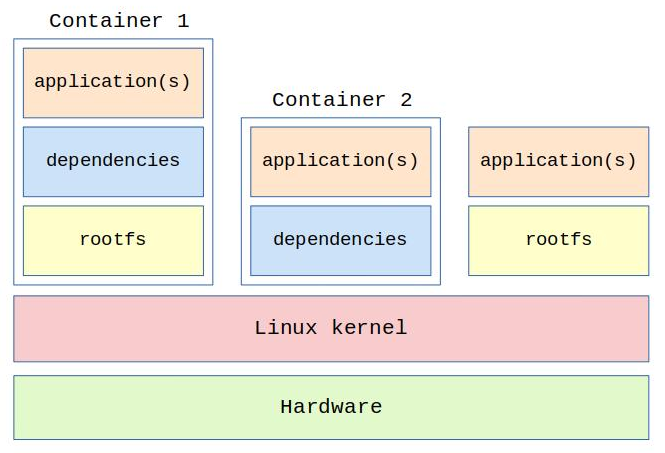

One of the challenges is the increase in the consumption of hardware resources (CPU, memory, I/O). The base Linux distribution should have all the components needed to manage and run container images (the Docker engine for example). As each container image is an isolated execution environment, we will have many duplicated libraries inside the containers, requiring more storage (NAND flash, eMMC, etc) and RAM (during execution). To solve this problem, container images need to be developed with a focus on saving resources.

Another problem could be wearing out the flash memory with frequent writes to the file system. An embedded system usually has a lifetime of many years (10+ years), and if the Linux distribution (including the container images) is not designed with this limitation in mind, in a short time the flash memory may start to fail and corrupt the file system. There is no secret here: the solution is to minimize writes to the flash memory device.
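A rough back-of-the-envelope estimate helps to see why write volume matters. The sketch below assumes ideal wear leveling and a simple model where the total data that can be written is the capacity multiplied by the rated program/erase cycles, divided by a write amplification factor. The function name and all the numbers are hypothetical; real figures must come from the flash datasheet and from measurements on the device.

```python
def flash_lifetime_years(capacity_gb, pe_cycles, writes_gb_per_day,
                         write_amplification=1.0):
    """Estimate flash lifetime in years under a simplified endurance model.

    Assumes perfect wear leveling: every block is erased equally often.
    """
    if writes_gb_per_day <= 0 or write_amplification <= 0:
        raise ValueError("write volume and amplification must be positive")
    total_endurance_gb = capacity_gb * pe_cycles
    effective_daily_gb = writes_gb_per_day * write_amplification
    return total_endurance_gb / effective_daily_gb / 365.0


if __name__ == "__main__":
    # Hypothetical 8 GB eMMC rated for 3000 P/E cycles, amplification of 4.
    for daily in (2.0, 0.5):
        years = flash_lifetime_years(8, 3000, daily, write_amplification=4)
        print(f"{daily} GB/day -> {years:.1f} years")
```

With these made-up numbers, 2 GB of writes per day gives about 8 years, below the 10+ year target, while cutting writes to 0.5 GB per day gives more than 30 years. The model is crude, but it shows how chatty logging or a write-heavy container can eat into the lifetime of the product.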

Creating a container image with cross-compiled binaries for the target platform is also not trivial. For example, how do we create on the host (x86-64 development environment) a Docker container image compiled for ARMv7 with the Qt5 libraries, glibc, libusb and a Qt5 application? Tools like Docker Buildx could help, but the generated code may not be optimized for the target. A build system (Buildroot, OpenEmbedded/Yocto Project) could also be a solution, but that would defeat one of the motivations for using containers, which is to avoid a build system and its learning curve.
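As an illustration of the Buildx route, here is a small sketch that assembles a `docker buildx build` command targeting ARMv7. The helper `buildx_args` and the tag `example/qt-app:1.0` are hypothetical; `--platform`, `--tag` and `--load` are existing Buildx options. This is only one possible way to drive the tool, and it assumes a Dockerfile in the build context.

```python
def buildx_args(tag, platform="linux/arm/v7", context=".", load=True):
    """Build a `docker buildx build` argument list for a target platform.

    `tag` is a hypothetical image tag; `context` is the build context path.
    """
    args = ["docker", "buildx", "build",
            "--platform", platform,   # target architecture of the image
            "--tag", tag]
    if load:
        # Load the resulting single-platform image into the local image store.
        args.append("--load")
    args.append(context)
    return args


if __name__ == "__main__":
    print(" ".join(buildx_args("example/qt-app:1.0")))
```

Building for ARMv7 on an x86-64 host this way relies either on QEMU user-mode emulation being registered on the host, which can be slow, or on a Dockerfile written to cross-compile in a build stage. Neither addresses target-specific compiler optimizations by itself.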

This is a problem that vendors of container-based Linux distributions will need to solve. For example, Toradex is solving this problem on Torizon through a plugin for Visual Studio. With a single click, the plugin is able to compile an application, package it in a Docker container image, deploy it to the target and run the container!

Another challenge is creating and deploying the final image of the system. From a development point of view, the process is simple. We install a base image on the hardware platform and develop applications inside containers. But how do we manage changes in the base image? And how do we create the final production image integrating the base Linux distribution and all container images? The provisioning of the final image in production is an important issue.

A problem with no apparent solution is the management of software licenses. When a product is based on free software, one of the obligations of the supplier (manufacturer of the device) is to provide its customers with a list of all free software projects used, their licenses and the source code (depending on the license). But how do we identify and consolidate all the license compliance information of the base Linux distribution and all container images?

To develop a safe and efficient product based on containers, engineers will need to delve into this type of solution. Understanding how containers work and how to configure and use Docker will be essential.

Despite making the update process easier, containers can make it difficult to manage the versions of devices in production. Imagine a product with 3 container images. Since we can update any container image independently, we could have several combinations of container versions on devices in the field. What if container A version 1.0 does not work with container B version 2.1? Would we break the system if we update container C to version 3.2? Can you see where I am going? We need a well-designed update system to help manage all the versions and dependencies between the container images and the base Linux distribution of the devices in the field. And certifying a product with containers that frequently update can also be a problem.
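One simple way to reason about this is to only allow combinations of versions that have been tested together. The sketch below is a hypothetical illustration, not how any particular update system works: `VALIDATED_SETS`, the container names and the version numbers are all made up, and a real solution would also track the version of the base distribution.

```python
# Hypothetical list of container version combinations tested together.
VALIDATED_SETS = [
    {"A": "1.0", "B": "2.0", "C": "3.1"},
    {"A": "1.0", "B": "2.0", "C": "3.2"},
    {"A": "1.1", "B": "2.1", "C": "3.2"},
]


def is_validated(installed, validated_sets=VALIDATED_SETS):
    """Return True if the installed versions match a validated combination."""
    return any(installed == known for known in validated_sets)


def can_update(installed, name, new_version, validated_sets=VALIDATED_SETS):
    """Return True if updating one container leads to a validated combination."""
    candidate = dict(installed)
    candidate[name] = new_version
    return is_validated(candidate, validated_sets)


if __name__ == "__main__":
    device = {"A": "1.0", "B": "2.0", "C": "3.1"}
    print(can_update(device, "C", "3.2"))  # C alone can move to 3.2
    print(can_update(device, "B", "2.1"))  # B 2.1 was never tested with A 1.0
```

In this made-up example, container C can be updated on its own, but container B cannot move to 2.1 unless container A is updated at the same time. The number of combinations to test grows quickly with the number of containers, which is exactly the management problem described above.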

So what does all this mean?

Conclusion?

Are containers the future of embedded Linux systems? I don’t really know. In this article, I tried to raise the advantages and challenges to start a discussion on the subject.

A solution with containers on embedded Linux is innovative and a paradigm shift, bringing the world and the culture of the server to the edge. It is a good option for rapid prototyping. And in some specific situations, for example to develop on an execution environment that depends on different and incompatible versions of the same libraries, containers can be the best option.

But I must confess that I still have some mixed feelings about it. A reasonable percentage of embedded Linux projects are simple and can be solved with BusyBox plus some libraries and applications. I feel very comfortable preparing a simple distribution with Buildroot or OpenEmbedded/Yocto Project and developing the applications on top. I like the control I have over the final product and the reproducibility of the system. I also come from a time when every byte and each CPU cycle had its importance, so a solution with containers, although productive from the development point of view, seems quite inefficient.

But more and more hardware is a commodity, right? And I understand the trade-offs.

Maybe I really need a paradigm shift to better understand how this culture of microservices and DevOps can be applied to the use of containers on embedded Linux systems. I’m getting ready for this future, waiting for an opportunity to put it into practice and see the result. Only time - and the market - will tell. ;-)

About the author: Sergio Prado has been working with embedded systems for more than 25 years. If you want to know more about his work, please visit the About Me page or Embedded Labworks website.

Please email your comments or questions to hello at sergioprado.blog, or sign up for the newsletter to receive updates.