Container technology in general, and Linux containers in particular, have become increasingly popular in recent years.

The use of containers in data centers and cloud computing environments grows every year, and tools like Docker and Kubernetes have become very popular.

It’s no different in the world of embedded Linux, where container-based distributions for embedded systems such as balenaOS and Linux microPlatform have started to appear.

But what are containers? What problems do they solve? What technologies are involved? Should we use containers to deploy applications on embedded systems? I will try to answer these and many other questions in this article!

Why do we need Linux containers?

So you are developing an application that has multiple dependencies. The application may have dependencies on libraries, configuration files or even other applications.

Now imagine that you need to distribute this application to different environments. For example, you are developing on Ubuntu 18.04 and want to deploy the application on machines running Fedora, Debian or even another version of Ubuntu.

How do you make sure that each of these distributions provides an execution environment that meets your application’s dependencies?

One way to solve this problem is to distribute the source code of the application with a build system like autotools or CMake, configured to check for the required dependencies and notify the user if any of them is missing. Along with the source code, you can distribute a manual (e.g. a README) documenting how to install the application, including the procedures to install its dependencies.

It works, right? But anyone who has ever developed and distributed applications that must run on multiple GNU/Linux distributions knows how much work it takes to create and maintain the build system configuration files as the application evolves.

Documenting the installation process and its dependencies is also quite tedious. For example, if you want to support 10 different Linux distributions, your manual will probably need installation procedures for each one of them!

This solution does not scale. Not to mention that you will force users to install several dependencies they might not otherwise need, which may even cause problems in their environment.

Can you see the problem here? There is no isolation between the runtime environment and the application (and its dependencies), which makes deploying applications on GNU/Linux distributions difficult and sometimes painful!

One way to solve this problem is to run the application inside a virtual machine. It would be enough to create a virtual machine image with all of the application’s dependencies and run this image with a virtualization tool like QEMU, VirtualBox or VMware Workstation Player.

However, a virtual machine is heavyweight. It runs a fully isolated instance of the operating system (kernel and root filesystem), which dramatically increases resource consumption (CPU, memory, I/O).

Why don’t we run this root filesystem isolated from the rest of the system, but on top of the same kernel? And why don’t we remove from this root filesystem every application and library not needed to run our application? That is a Linux container in a nutshell!

What are Linux containers?

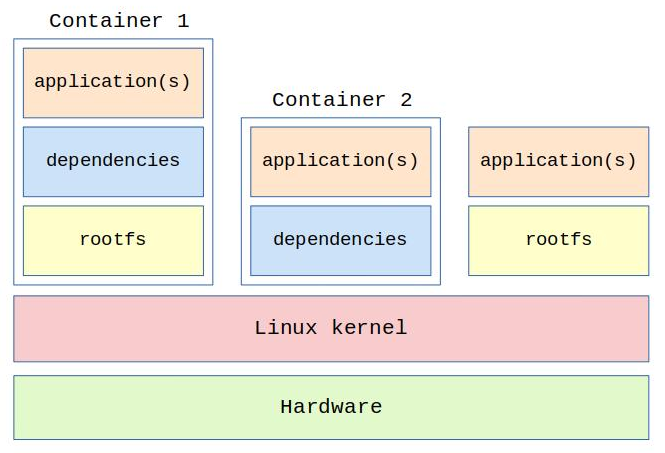

A Linux container is a minimal filesystem containing only the software components required to run a specific application or group of applications, which the kernel is able to “run” completely isolated from the rest of the system.

A Linux container can solve the problem of distributing applications to environments with different configurations. And unlike a virtual machine, a container is lighter and less resource-intensive: a container image is smaller than a virtual machine image, and the kernel is shared with the other processes and containers running on the operating system.

In other words, a Linux container is an isolated instance of the user space layer: it has its own allocation of resources (CPU, memory, I/O) and runs in isolation from the rest of the system. A container can run a single application or an entire root filesystem (including the init system), and you can start and run multiple containers at the same time.

If you access a terminal inside a container and run the ps command, you will only see the container processes. If you run the mount command, you will only see the container mount points. If you run ls, you will only see the container filesystem(s). If you run the reboot command, only container applications will restart, and it may take less than 1 second!
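This behavior follows from how tools like ps work: they list the numeric directories under /proc, one per process. A container with its own PID namespace typically mounts a fresh procfs, which only exposes the processes of that namespace. The Python sketch below mimics that lookup; the function names are hypothetical, and the proc_root parameter is an assumption added only so the code can be pointed at a scratch directory.

```python
from __future__ import annotations

import os


def visible_pids(proc_root: str = "/proc") -> list[int]:
    """Return the PIDs a ps-like tool would list under proc_root (hypothetical helper)."""
    pids = []
    for entry in os.listdir(proc_root):
        # Every numeric directory is a process; skip "self", "meminfo", "sys", ...
        if entry.isdigit() and os.path.isdir(os.path.join(proc_root, entry)):
            pids.append(int(entry))
    return sorted(pids)


def command_name(pid: int, proc_root: str = "/proc") -> str:
    """Read the short command name the kernel stores in /proc/<pid>/comm."""
    with open(os.path.join(proc_root, str(pid), "comm")) as f:
        return f.read().strip()
```

Calling visible_pids() on the host lists every process; running the same code inside a container with its own PID namespace and /proc mount should list only the container's processes, usually starting at PID 1.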

Advantages of using Linux containers

A container facilitates the distribution of applications and enables their execution in totally different environments.

Updating applications is also simpler, since updating an application and its dependencies is just a matter of updating the container image.

A containerized solution scales much better since you can run and control multiple instances of a container at the same time.

Security is another important factor. If properly configured, a container can run isolated from the host operating system, which improves overall system security.

Finally, containers perform better and use fewer resources than virtual machines.

How are Linux containers implemented?

The container implementation uses several Linux kernel features, including cgroups and namespaces.

Control groups (cgroups) are a very interesting Linux kernel feature that makes it possible to partition system resources (CPU, memory, I/O) among processes or groups of processes.
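As an illustration, here is a minimal Python sketch of how a container runtime might use the cgroup v2 interface to cap memory and CPU. It assumes the unified hierarchy is mounted at /sys/fs/cgroup, that the memory, cpu and pids controllers are enabled in the parent's cgroup.subtree_control, and that it runs as root; the helper names and the root parameter are hypothetical.

```python
from __future__ import annotations

import os

# Assumption: cgroup v2 (unified hierarchy) mounted here; writing requires root.
CGROUP_ROOT = "/sys/fs/cgroup"


def _write(path: str, value: str) -> None:
    with open(path, "w") as f:
        f.write(value)


def create_limited_cgroup(name: str, memory_max_bytes: int, cpu_quota_us: int,
                          cpu_period_us: int = 100_000, pids_max: int | None = None,
                          root: str = CGROUP_ROOT) -> str:
    """Create a child cgroup with memory/CPU limits and return its path (hypothetical helper)."""
    path = os.path.join(root, name)
    os.makedirs(path, exist_ok=True)
    # Hard memory limit in bytes; if usage cannot be reclaimed below it,
    # the OOM killer is invoked inside this group.
    _write(os.path.join(path, "memory.max"), str(memory_max_bytes))
    # "QUOTA PERIOD": the group may use QUOTA us of CPU time every PERIOD us.
    _write(os.path.join(path, "cpu.max"), f"{cpu_quota_us} {cpu_period_us}")
    if pids_max is not None:
        # Upper bound on the number of processes/threads in the group.
        _write(os.path.join(path, "pids.max"), str(pids_max))
    return path


def add_process(cgroup_path: str, pid: int) -> None:
    """Move a process into the cgroup; children it forks later start there too."""
    _write(os.path.join(cgroup_path, "cgroup.procs"), str(pid))
```

For example, create_limited_cgroup("demo", 64 * 1024 * 1024, 50_000) followed by add_process(path, pid) would restrict that process to 64 MiB of memory and half of one CPU (50 ms of CPU time every 100 ms).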

Namespaces, on the other hand, make it possible to isolate what a process can see on Linux (PIDs, users, network stack, mount points, etc.).
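You can inspect namespace membership from user space: each process has a /proc/<pid>/ns directory with one symbolic link per namespace type, and two processes share a namespace when those links point at the same inode. The Python sketch below (hypothetical helper names) reads those links; reading another process's links may require privileges.

```python
from __future__ import annotations

import os
import re

# Namespace links look like "pid:[4026531836]": the type, then the inode number.
_NS_LINK = re.compile(r"^(\w+):\[(\d+)\]$")


def parse_ns_link(target: str) -> tuple[str, int]:
    """Split a namespace link target into (namespace type, inode number)."""
    match = _NS_LINK.match(target)
    if match is None:
        raise ValueError(f"unexpected namespace link: {target!r}")
    return match.group(1), int(match.group(2))


def namespaces_of(pid: int | str = "self") -> dict[str, int]:
    """Map each entry in /proc/<pid>/ns to the inode of its namespace."""
    ns_dir = f"/proc/{pid}/ns"
    result = {}
    for name in os.listdir(ns_dir):
        try:
            target = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            # Skip links that cannot be read (a permission error, for example).
            continue
        _, inode = parse_ns_link(target)
        result[name] = inode
    return result


def share_namespace(pid_a: int | str, pid_b: int | str, name: str) -> bool:
    """True if both processes are in the same namespace of the given type."""
    return namespaces_of(pid_a)[name] == namespaces_of(pid_b)[name]
```

Comparing namespaces_of("self") on the host with the same call inside a container should show different inode numbers for the namespaces the container runtime created.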

Using these and some other features, it is possible to create a completely isolated execution environment for applications on Linux, and that’s where the tools come in.

Tools and standards

Several tools are available to work with containers on Linux including LXC, systemd-nspawn and Docker.

LXC is a userspace interface for the Linux kernel containment features. With simple command-line tools, it allows Linux users to easily create and manage system or application containers.

Systemd-nspawn (part of systemd) is a very simple and effective command-line tool to run applications or full root filesystems inside containers.

Docker is the most popular container management tool as I write this article. Developed by Docker Inc., it is user-friendly and has more features than lower-level tools like LXC and systemd-nspawn. There is even a public registry of container images called Docker Hub.

Finally, we have Kubernetes, an open-source container-orchestration system for automating application deployment, scaling, and management. While Docker and LXC are capable of creating and running containers, Kubernetes is able to automate the management of containers.

The Open Container Initiative (OCI) is a Linux Foundation project that aims to establish and maintain open standards for container technology. Its specifications include a runtime specification, which defines how a container is configured and executed, and an image specification, which standardizes the format of container images.
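To make the runtime specification more concrete, the Python sketch below builds a deliberately minimal config.json, the file an OCI runtime such as runc reads from a bundle directory. The field names follow the runtime specification, but the helper itself is hypothetical and the result is incomplete; the runc spec command generates a complete template. Note how the linux.namespaces and linux.resources sections map onto the namespaces and cgroups features described earlier.

```python
from __future__ import annotations

import json


def minimal_oci_config(args: list[str], hostname: str = "container",
                       memory_limit: int | None = None) -> dict:
    """Build a deliberately incomplete OCI runtime config (hypothetical helper)."""
    config = {
        "ociVersion": "1.0.2",
        "process": {
            "terminal": False,
            "user": {"uid": 0, "gid": 0},
            "args": args,
            "env": ["PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin"],
            "cwd": "/",
        },
        # The container's root filesystem, relative to the bundle directory.
        "root": {"path": "rootfs", "readonly": True},
        "hostname": hostname,
        # Only /proc is mounted here; real configs also mount /dev, /sys, etc.
        "mounts": [{"destination": "/proc", "type": "proc", "source": "proc"}],
        "linux": {
            # Each entry asks the runtime to create a new namespace of that type.
            "namespaces": [{"type": t} for t in ("pid", "network", "ipc", "uts", "mount")],
        },
    }
    if memory_limit is not None:
        # Applied by the runtime as a cgroup memory limit, in bytes.
        config["linux"]["resources"] = {"memory": {"limit": memory_limit}}
    return config


if __name__ == "__main__":
    print(json.dumps(minimal_oci_config(["sh"], memory_limit=64 * 1024 * 1024), indent=2))
```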

But what about using containers on embedded systems?

Containers on embedded systems

Although containers are not a new idea, their use has multiplied in recent years, and several container solutions for embedded Linux are now available, including balenaOS, Linux microPlatform, Pantahub and Torizon.

There are still many challenges that need to be addressed in the embedded field. Do we have enough hardware resources to run containers on embedded systems? How do we easily package a cross-compiled application and its dependencies into a container image? How do we manage the licenses of software artifacts (base operating system, container images, etc.)?

In the next article, we will focus on the use of Linux containers on embedded systems and discuss these and other challenges in much more detail.

About the author: Sergio Prado has been working with embedded systems for more than 25 years. If you want to know more about his work, please visit the About Me page or Embedded Labworks website.

Please email your comments or questions to hello at sergioprado.blog, or sign up for the newsletter to receive updates.